Although the sense that we are experiencing a singularity-like event with computers and the world wide web is visceral, the current concept of a singularity is not the best explanation for this transformation in progress.

Singularity is a term borrowed from physics to describe a cataclysmic threshold in a black hole. In the canonical usage, it defines the moment an object being pulled into a black hole passes a point beyond which nothing about it, including information, can escape. In other words, although an object’s entry into a black hole is steady and knowable, once it passes this discrete point absolutely nothing about its future can be known. This disruption on the way to infinity is called a singular event — a singularity.

Mathematician and science fiction author Vernor Vinge applied this metaphor to the acceleration of technological change. The power of computers has been increasing at an exponential rate with no end in sight, which led Vinge to an alarming conclusion. In his analysis, at some point not too far away, innovations in computer power would enable us to design computers more intelligent than we are; these smarter computers could design computers yet smarter than themselves, and so on, the loop of computers-making-newer-computers accelerating very quickly towards unimaginable levels of intelligence. This progress in IQ and power, when graphed, generates a rising curve that appears to approach the straight-up vertical limit of infinity. In mathematical terms it resembles the singularity of a black hole, because, as Vinge announced, it will be impossible to know anything beyond this threshold. If we make an Artificial Intelligence (or AI), which in turn makes a greater AI, ad infinitum, then their futures are unknowable to us, just as our lives are unfathomable to a slug. So the singularity becomes, in a sense, a black hole, an impenetrable veil hiding our future from us.

Ray Kurzweil, a legendary inventor and computer scientist, seized on this metaphor and has applied it across a broad range of technological frontiers. He demonstrated that this kind of exponential acceleration isn’t unique to computer chips; it’s happening in most categories of innovation driven by information, in fields as diverse as genomics, telecommunications and commerce. The technium itself is accelerating its rate of change. Kurzweil found that if you make a very crude comparison between the processing power of neurons in human brains and the processing powers of transistors in computers, you could map out the point at which computer intelligence will exceed human intelligence, and thus predict when the crossover singularity would happen. Kurzweil calculates that the singularity will happen around 2040. Since that, relatively, seems like tomorrow, Kurzweil announced with great fanfare that the “Singularity is near.” In the meantime, everything is racing to a point that we cannot see or conceive beyond.

Though, by definition, we cannot know what will be on the other side of the singularity, Kurzweil and others believe that our human minds, at least, will become immortal because we’ll be able to download, migrate, or eternally repair them with our collective brainpower. Our minds (that is, ourselves) will continue on with or without our upgraded bodies. The singularity, then, becomes a portal or bridge to the future. All you have to do is live until the singularity in 2040. If you make it, you’ll become immortal.

I’m not the first person to point out the many similarities between the singularity and the Rapture. The parallels are so close that some critics call the singularity “the spike” to hint at that decisive moment of fundamentalist Christian apocalypse. At the Rapture, when Jesus returns, all believers will suddenly be lifted out of their ordinary lives and ushered directly into heavenly immortality without experiencing death. This singular event will produce repaired bodies and intact minds full of eternal wisdom, and it is scheduled to happen “in the near future.” This hope is almost identical to the techno-Rapture of the singularity. It is worth trying to unravel the many assumptions built into the Kurzweilian version of singularity, because despite its oft-misleading nature, some aspects of the technological singularity do capture the dynamic of technological change.

First, immortality is in no way ensured by a singularity of AI, for any number of reasons: Our “selves” may not be very portable, engineered eternal bodies may not be very appealing, nor may super-intelligence alone be enough to solve the problem of overcoming bodily death.

Second, intelligence may or may not be infinitely expandable from our present point. Since we can imagine a manufactured intelligence greater than ours, we think that we possess enough intelligence to pull off this trick of bootstrapping, but in order to reach a singularity of ever-increasing AI, we have to be smart enough not only to create a greater intelligence, but also to make one that is able to move to the next level. A chimp is hundreds of times smarter than an ant, but the greater intelligence of a chimp is not smart enough to make a mind smarter than itself. In other words, not all intelligences are capable of bootstrapping intelligence. We might call a mind capable of imagining another type of intelligence but incapable of replicating itself a “Type 1” mind. Following this classification, a “Type 2” mind would be an intelligence capable of replicating itself (making artificial minds) but incapable of making one substantially smarter. A “Type 3” mind, then, would be capable of creating advanced enough intelligence to make another generation even smarter. We assume our human minds are Type 3, but it remains an assumption. It is possible that our minds are Type 1, or that greater intelligence may have to evolve slowly, rather than be bootstrapped to an instant singularity.

Third, the notion of a mathematical singularity is illusory, as any chart of exponential growth will show. As in many of Kurzweil’s examples, an exponential can be plotted linearly so that the chart shows the growth taking off like a rocket. It can also be plotted, however, on a log-log graph, which has the exponential growth built into the graph’s axis. In this case, the “takeoff” is a perfectly straight line. Kurzweil’s website has scores of graphs, all showing straight-line exponential growth headed towards a singularity. But any log-log graph of a function will show a singularity at Time 0, which is now. If something is growing exponentially, the point at which it will appear to rise to infinity will always be “just about now.”

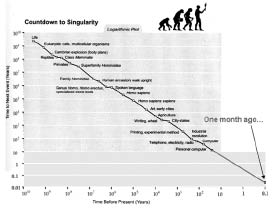

Look at Kurzweil’s chart of the exponential rate at which major events occur in the world, called “Countdown to Singularity” (Figure 1). It displays a beautiful, laser-straight rush across millions of years of history.

If you continue the curve to the present day, however, rather than cutting it off 30 years ago, it shows something strange. Kevin Drum, a fan and critic of Kurzweil who writes for the Washington Monthly, extended this chart to the present (Figure 2).

Surprisingly, it suggests the singularity is now; more weirdly, it suggests that the view would have looked the same at almost any time along the curve. If Benjamin Franklin, an early Kurzweil type, had mapped out the same graph in the 1700s, his graph would have suggested that the singularity was happening right then, right now! The same would have happened at the invention of radio, or the appearance of cities, or at any point in history, because, as the straight line indicates, the “curve,” or rate, is the same anywhere along the line.

Switching chart modes doesn’t help. If you define the singularity as the near-vertical asymptote you get when you plot an exponential progression on a linear chart, then you’ll get that infinite slope at any arbitrary end point along the exponential progression. That means that the singularity is “near” at any end point along the time line, as long as you are in exponential growth. The singularity is simply a phantom that materializes anytime you observe exponential acceleration retrospectively. These charts correctly demonstrate that exponential growth extends back to the beginning of the cosmos, meaning that for millions of years the singularity was just about to happen! In other words, the singularity is always “near,” has always been “near,” and will always be “near.”
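The claim that the takeoff appears at any end point can be checked numerically. The sketch below is a minimal illustration, not drawn from Kurzweil’s data: the doubling-per-step growth rate, the `exponential` and `normalized_tail` helpers, and the end points 10 and 40 are all assumptions chosen for the example. It rescales the last few steps of an exponential by the value at the chosen end point and compares the resulting shapes.

```python
import math

# Assumption for illustration: the quantity doubles once per time step.
GROWTH_RATE = math.log(2)


def exponential(t):
    """Value of the exponential curve at time step t."""
    return math.exp(GROWTH_RATE * t)


def normalized_tail(end_time, window=5):
    """Last `window` + 1 values of the curve, divided by the value at end_time.

    This mimics drawing a linear chart whose vertical axis is scaled so
    that the most recent point sits at the top of the page.
    """
    end_value = exponential(end_time)
    return [exponential(t) / end_value
            for t in range(end_time - window, end_time + 1)]


# Two observers standing at very different points on the same curve.
tail_early = normalized_tail(10)
tail_late = normalized_tail(40)

# Compare the two rescaled views point by point.
same_shape = all(math.isclose(a, b, rel_tol=1e-9)
                 for a, b in zip(tail_early, tail_late))
print(same_shape)
```

Under these assumptions the rescaled tails agree to floating-point precision, so an observer at step 10 and one at step 40 would each draw the same rocket-like finish on a linear chart.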

We could broaden the definition of intelligence to include evolution, which is, after all, a type of learning. In this case, we could say that intelligence has been bootstrapping itself with smarter stuff all along, making itself smarter, ad infinitum. There is no discontinuity in this conception, nor any discrete points to map.

Fourth, and most importantly, the technological transitions that the singularity inaccurately represents are completely imperceptible from within the transition itself. A phase shift from one level to the next is only visible from the perch of the new level, after arrival there. Compared to a neuron, the mind is a singularity. It is invisible and unimaginable to the lower, inferior parts. From the perspective of a neuron, the movement from a few neurons, to many neurons, to an alert mind, seems a slow, continuous journey of gathering neurons. There is no sense of disruption, of Rapture. This discontinuity can only be seen in retrospect.

Language is a singularity of sorts, as is writing. Nevertheless, the path to both of these was continuous and imperceptible to the acquirers. I am reminded of a great story that a friend tells of cavemen sitting around the campfire 100,000 years ago, chewing on the last bits of meat, chatting in guttural sounds. Their conversation goes something like this:

— “Hey, you guys, we are TALKING!”

— “What do you mean ‘TALKING?’ Are you finished with that bone?”

— “I mean, we are SPEAKING to each other! Using WORDS. Don’t you get it?”

— “You’ve been drinking that grape stuff again, haven’t you?”

— “See, we are doing it right now!”

— “Doing what?”

As the next level of organization kicks in, the current level is incapable of perceiving the new level, because that perception must take place retrospectively. The coming phase shift is real, but it will be imperceptible during the transition. Sure, things will speed up, but that will only hide the real change, which is a change in the structural rules of the game. We can expect in the next hundred years that life will appear to be ordinary and not discontinuous, certainly not cataclysmic. All the while, something new will be gathering, and we’ll slowly recognize that we’ve acquired the tools to perceive the new tools that are present, and that we’ve had them for some time.

When I mentioned this to emerging digital technology specialist and digerati Esther Dyson, she reminded me that we have an experience close to the singularity every day: “It’s called waking up. Looking backwards, you can understand what happens, but in your dreams you are unaware that you could become awake.”

A thousand years from now, all the 11-dimensional charts of the time will show that “the singularity is near.” Immortal beings, global consciousness and everything else we hope for in the future may be real, but a linear-log curve in 30076 will show that a singularity approaches. The singularity is not a discrete event. It’s a continuum woven into the very warp of extropic systems. It is a traveling mirage that moves along with us, as life and the technium accelerate their evolution.

Ray Kurzweil writes in response:

Allow me to clarify the metaphor implied by the term “singularity.” The metaphor implicit in the term “singularity” as applied to future human history is not to a point of infinity, but rather to the event horizon surrounding a black hole. Densities are not infinite at the event horizon but merely large enough that it is difficult to see past them from outside.

I say “difficult” rather than impossible, because the Hawking radiation emitted from the event horizon is likely to be quantum entangled with events inside the black hole, so there may be ways of retrieving the information. This was the concession made recently by Stephen Hawking. However, without getting into the details of this controversy, it is fair to say that seeing past the event horizon is difficult — impossible from the perspective of classical physics — because the gravity of the black hole is strong enough to prevent classical information from inside the black hole from escaping.

We can, however, use our intelligence to infer what life is like inside the event horizon, even though seeing past the event horizon is effectively blocked. Similarly, we can use our intelligence to make meaningful statements about the world after the historical singularity, but seeing past this event horizon is difficult because of the profound transformation that it represents.

Therefore, discussions of infinity are irrelevant. You are correct in your statement that exponential growth is smooth and continuous. From a mathematical perspective, an exponential looks the same everywhere and this applies to the exponential growth of the power (as expressed in price-performance, capacity, bandwidth, etc.) of information technologies. However, despite being smooth and continuous, exponential growth is nonetheless explosive once the curve reaches transformative levels. Consider the Internet. When the ARPANET went from 10,000 nodes to 20,000 in one year, and then to 40,000 and then 80,000, it was of interest only to a few thousand scientists. When ten years later it went from 10 million nodes to 20 million, and then 40 million and 80 million, the appearance of this curve looked identical (especially when viewed on a log-log plot), but the consequences were profoundly more transformative. There is a point in the smooth exponential growth of these different aspects of information technology when they transform the world as we know it.
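The ARPANET figures in this reply can be laid side by side in a short sketch. The node counts are the ones quoted above; the helper names (`doubling_series`, `log_steps`, `absolute_steps`) are hypothetical and exist only for illustration. The sketch compares the step size on a log scale with the raw number of nodes added in each doubling.

```python
import math


def doubling_series(start, doublings=3):
    """Node counts for `doublings` successive doublings from `start`."""
    return [start * 2 ** k for k in range(doublings + 1)]


def log_steps(series):
    """Vertical distance between consecutive points on a log10 axis."""
    return [math.log10(b) - math.log10(a)
            for a, b in zip(series, series[1:])]


def absolute_steps(series):
    """Raw number of nodes added between consecutive points."""
    return [b - a for a, b in zip(series, series[1:])]


early = doubling_series(10_000)        # 10,000 up to 80,000 nodes
later = doubling_series(10_000_000)    # 10 million up to 80 million nodes

# On a log axis every doubling is the same height, whatever the era.
print(log_steps(early))
print(log_steps(later))

# In raw node counts the later doublings are a thousand times larger.
print(absolute_steps(early))
print(absolute_steps(later))
```

The log-scale steps are identical for both eras, which is why the two stretches of curve look alike, while the absolute steps differ by a factor of a thousand, which is one way to read the phrase “profoundly more transformative.”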

You cite the extension made by Kevin Drum of the log-log plot that I provide of key paradigm shifts in biological and technological evolution (which appears on page 17 of The Singularity Is Near). This extension is utterly invalid. You cannot extend in this way a log-log plot for just the reasons you cite. The only straight line that is valid to extend on a log plot is a straight line representing exponential growth when the time axis is on a linear scale and the value (such as price-performance) is on a log scale. In this case, you can extend the progression, but even here you have to make sure that the paradigms to support this ongoing exponential progression are available and will not saturate. That is why I discuss at length the paradigms that will support ongoing exponential growth of both hardware and software capabilities. It is not valid to extend the straight line when the time axis is on a log scale. The only point of these graphs is that there has been acceleration in paradigm shift in biological and technological evolution. If you want to extend this type of progression, then you need to put time on a linear x-axis and the number of years (for the paradigm shift or for adoption) as a log value on the y-axis. Then it may be valid to extend the chart. I have a chart like this on page 50 of my book.
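One way to see why a straight line cannot simply be carried forward when time sits on a log scale is sketched below. It assumes, for illustration only, that the log axis measures years before the present and that the walk starts 1,000 years back; the function name is hypothetical. Each equal-width step along such an axis leaves a tenth of the time that remained before it, so no finite number of steps reaches year zero.

```python
def years_before_present(start_years, steps):
    """Positions reached by walking `steps` equal-width steps along a
    log10 time axis, starting `start_years` before the present.

    Assumption: the axis is labeled in years before the present, so one
    step to the right divides the remaining time by ten.
    """
    return [start_years / 10 ** k for k in range(steps + 1)]


points = years_before_present(1_000.0, 6)

# Years actually covered by each equal-width step on the chart.
covered = [a - b for a, b in zip(points, points[1:])]

print(points)
print(covered)

# However many steps are taken, the present (zero years back) is never reached.
print(all(p > 0 for p in points))
```

In this sketch the same width of paper stands first for 900 years and later for less than a hundredth of a year, which suggests why extending the line toward “now” crowds every remaining event into the final sliver of the axis.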

This acceleration is a key point. These charts show that technological evolution emerges smoothly from the biological evolution that created the technology-creating species. You mention that an evolutionary process can create greater complexity, and greater intelligence, than existed prior to the process. And it is precisely that intelligence-creating process that will go into hyperdrive once we can master, understand, model, simulate, and extend the methods of human intelligence through reverse-engineering it and applying these methods to computational substrates of exponentially expanding capability.

That chimps are just below the threshold needed to understand their own intelligence is a result of the fact that they do not have the prerequisites to create technology. There were only a few small genetic changes, comprising a few tens of thousands of bytes of information, which distinguish us from our primate ancestors: a bigger skull, a larger cerebral cortex, and a workable opposable appendage. There were a few other changes that other primates share to some extent, such as mirror neurons and spindle cells.

As I pointed out in my Long Now talk, a chimp’s hand looks similar to a human’s, but the pivot point of the thumb does not allow facile manipulation of the environment. In contrast, our human ability to look inside the human brain and model, simulate and recreate the processes we encounter has already been demonstrated. The scale and resolution of these simulations will continue to expand exponentially. I make the case that we will reverse-engineer the principles of operation of the several hundred information processing regions of the human brain within about twenty years and then apply these principles (along with the extensive tool kit we are creating through other means in the AI field) to computers that will be many times (by the 2040s, billions of times) more powerful than needed to simulate the human brain.

You write, “Kurzweil found that if you make a very crude comparison between the processing power of neurons in human brains and the processing powers of transistors in computers, you could map out the point at which computer intelligence will exceed human intelligence.” That is an oversimplification of my analysis. I provide in my book four different approaches to estimating the amount of computation required to simulate all regions of the human brain based on actual functional recreations of brain regions. These all come up with answers in the same range, from 10^14 to 10^16 cps for creating a functional recreation of all regions of the human brain, so I’ve used 10^16 cps as a conservative estimate.

This refers only to the hardware requirement. As noted above, I have an extensive analysis of the software requirements. Reverse-engineering the human brain is not the only source of intelligent algorithms (and, in fact, has not been a major source at all until recently, as we didn’t have scanners that could see into the human brain with sufficient resolution). However, my analysis of reverse-engineering the human brain is along the lines of an existence proof that we will have the software methods underlying human intelligence within a couple of decades.

Another important point in this analysis is that the complexity of the design of the human brain is about a billion times simpler than the actual complexity we find in the brain. This is due to the brain (like all biology) being a probabilistic recursively expanded fractal. This discussion goes beyond what I can write here, although it is in the book. We can ascertain the complexity of the human brain’s design, because the design is contained in the genome. I show that the genome (including non-coding regions) only has about 30 to 100 million bytes of compressed information in it due to the massive redundancies in the genome.

In summary, I agree that the singularity is not a discrete event. A single point of infinite growth or capability is not the metaphor being applied. Yes, the exponential growth of all facets of information technology is smooth, but it is nonetheless explosive and transformative.